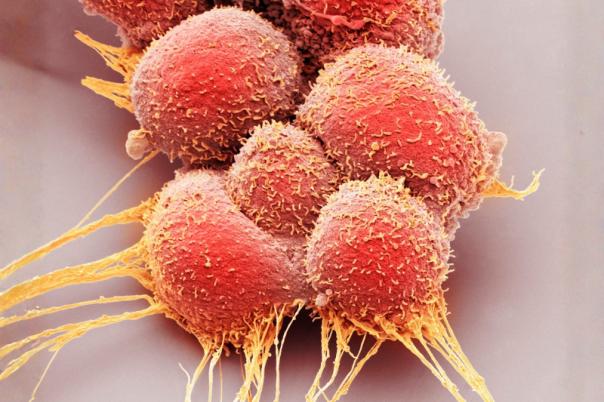

Artificial Intelligence (AI) is helping to accelerate the development of more effective and targeted medicines, thanks to its ability to predict antibody behaviour, optimise antibody design, speed up discovery and drug development, and enhance therapies and diagnostics.

As AI reshapes every aspect of biomedical innovation, protein and antibody engineering is emerging as one of its most promising applications. But what does this transformation actually look like in practice? Our June online Thought Leadership session, ‘Smart Molecules – Harnessing AI and Data to Advance Antibody & Protein Engineering’, offers a masterclass in how AI and data are accelerating antibody and protein engineering.

This online Thought Leadership session, available to watch now on our NextGen Biomed community portal, features expert insights from academia and industry: Rahmad Akbar (Senior Data Scientist at Novo Nordisk), Talip Ucar (Senior Director at AstraZeneca), Esmaiel Jabbari (Professor of Chemical and Biomedical Engineering at the University of South Carolina), and Thomas Kraft (Principal Scientist at Roche).

Keep reading for some of the key insights and takeaways from this forward-thinking session exploring how artificial intelligence and data-driven strategies are transforming the landscape of antibody and protein engineering.

“If you haven’t tried AI for antibody design - please try it. The technology is maturing. And if you’re not ready to adopt this, then you might be left behind.”

— Rahmad Akbar, Novo Nordisk

Let’s first dive into our exclusive interview with Rahmad Akbar, who brings deep expertise at the intersection of data science and antibody design. Akbar reflected on the shift from his academic work, which focused on establishing proof of principle for generative antibody design and for antibody–antigen binding prediction, to his role at Novo Nordisk, which centres on operationalising those proofs of principle. Data and AI sit at the heart of Novo Nordisk’s research strategy, which integrates internal, legacy, and external data sources with a robust machine learning layer. AI is not viewed as an add-on but as a foundational component of the company’s research architecture.

“We're not treating AI and data separately, it's more like they are a package deal. You cannot do one without the other.”

Unlike traditional high-throughput screening, which Akbar likens to “relying on chance,” AI enables a more efficient design–build–test–learn cycle. Novo Nordisk now designs and tests smaller, data-enriched plates, prioritising expression and developability before investing in wet-lab validation. Prediction bottlenecks were a recurring theme throughout the discussion. While expression and developability are increasingly predictable, binding affinity, especially for flexible molecules such as antibodies, remains a complex challenge. According to Akbar, predicting binding “is the hardest problem to solve.”

Looking ahead, Akbar envisions a future in which in silico prediction becomes the workhorse of drug design, with the wet lab serving primarily as quality control. This would mark a major shift in how pharma companies conceptualise and invest in early discovery. Achieving consistent success across targets, not just in isolated proof-of-concept cases, will be essential for democratising AI-powered design.

When the discussion opened up to our expert panellists, one of the most widely agreed-upon themes was the central role of structured, high-quality data. Talip Ucar discussed AstraZeneca’s approach to rebuilding its data pipelines and infrastructure to support scalable AI adoption.

“If you want to integrate AI through our processes in a very good manner, you have to start almost from scratch - generate new data, with the new policy, with the new established standards.”

Ucar also shared insights on AstraZeneca’s successful participation in the first phase of a federated learning initiative with other Big Pharma companies. Federated learning allows multiple organisations to train machine learning models collaboratively without exposing their raw data.
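To make the idea more concrete, here is a minimal Python sketch of federated averaging (FedAvg), one common federated learning scheme. It is a hypothetical illustration only, not a description of the AstraZeneca initiative: the one-feature linear model, the three toy "organisations", and the function names `local_update` and `federated_average` are all assumptions. Each organisation trains on its own private data and shares only its updated model parameters; a coordinator averages them, weighted by dataset size, and sends the result back for the next round.

```python
"""Minimal federated averaging (FedAvg) sketch.

Hypothetical illustration only: each "organisation" fits a one-feature
linear model y ~ w*x + b on its own private data, and only the model
parameters (never the raw data) are sent to a coordinator for averaging.
"""

from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (x, y) pairs held privately by one client


def local_update(w: float, b: float, data: Dataset,
                 lr: float = 0.01, epochs: int = 20) -> Tuple[float, float]:
    """Run a few epochs of full-batch gradient descent on one client's data."""
    n = len(data)
    for _ in range(epochs):
        # Gradients of mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def federated_average(clients: List[Dataset], rounds: int = 500) -> Tuple[float, float]:
    """Coordinator loop: broadcast global weights, collect local updates, average them."""
    w, b = 0.0, 0.0
    total = sum(len(d) for d in clients)
    for _ in range(rounds):
        # Each client starts from the current global model; raw data never leaves it.
        updates = [local_update(w, b, d) for d in clients]
        # Weight each client's parameters by its share of the total data.
        w = sum(len(d) * uw for d, (uw, _) in zip(clients, updates)) / total
        b = sum(len(d) * ub for d, (_, ub) in zip(clients, updates)) / total
    return w, b


if __name__ == "__main__":
    # Three hypothetical organisations whose private data follow y = 2x + 1.
    clients = [
        [(x, 2 * x + 1) for x in (0.0, 1.0, 2.0)],
        [(x, 2 * x + 1) for x in (3.0, 4.0)],
        [(x, 2 * x + 1) for x in (0.5, 1.5, 2.5, 3.5)],
    ]
    w, b = federated_average(clients)
    print(f"global model: y = {w:.2f}x + {b:.2f}")
```

In this toy setup only two numbers per organisation cross the boundary each round. Real deployments may layer further safeguards, such as secure aggregation, on top of this basic loop.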

Thomas Kraft highlighted insufficient data as a key challenge for machine learning models. He endorsed gradient-boosted decision trees (rather than large language models or classic deep learning) and intermediate in vitro models (to predict human data) as practical solutions. Kraft also described Roche’s use of tool compounds “to sample a certain biophysical space, not with the intention of actually being for a specific project, but for learning.” Because these compounds are by design not tied to any specific project, they can be used to fill gaps in datasets and shared safely between pharma and academic teams.

From an academic viewpoint, Professor Jabbari described how his lab integrates molecular simulations with machine learning to study and predict the structure of short peptides, which are often poorly represented in public databases. He emphasised that AI models can only be as powerful as the context they are given, explaining that the conditions under which a protein was expressed or purified can influence outcomes dramatically and must be captured alongside the data to train reliable models.

The discussion concluded with a clear, three-part message: AI is transforming discovery; data quality, completeness, and context matter more than ever; and federated learning is an effective tool for secure, collaborative progress.

There is significant momentum behind using AI and data-driven approaches to advance antibody and protein engineering. For organisations hoping to stay competitive, investing in AI, data infrastructure, and collaborative models appears imperative. While challenges around data quality, model interpretation, and integration remain, the tools are maturing rapidly, and the gains in speed, accuracy, and scalability are too substantial to ignore.